Abstract

Machine learning (ML) holds great promise for impacting healthcare delivery; however, to date most methods are tested in ‘simulated’ environments that cannot recapitulate factors influencing real-world clinical practice. We prospectively deployed and evaluated a random forest algorithm for therapeutic curative-intent radiation therapy (RT) treatment planning for prostate cancer in a blinded, head-to-head study with full integration into the clinical workflow. ML- and human-generated RT treatment plans were directly compared in a retrospective simulation with retesting (n = 50) and a prospective clinical deployment (n = 50) phase. Consistently throughout the study phases, treating physicians assessed ML- and human-generated RT treatment plans in a blinded manner following a priori defined standardized criteria and peer review processes, with the selected RT plan in the prospective phase delivered for patient treatment. Overall, 89% of ML-generated RT plans were considered clinically acceptable and 72% were selected over human-generated RT plans in head-to-head comparisons. RT planning using ML reduced the median time required for the entire RT planning process by 60.1% (118 to 47 h). While ML RT plan acceptability remained stable between the simulation and deployment phases (92 versus 86%), the number of ML RT plans selected for treatment was significantly reduced (83 versus 61%, respectively). These findings highlight that retrospective or simulated evaluation of ML methods, even under expert blinded review, may not be representative of algorithm acceptance in a real-world clinical setting when patient care is at stake.

Data availability

Deidentified blinded RT plan review raw data used to conduct the retrospective and prospective analyses have been included in the original submission and can be made available upon request to T.G.P. Requests for the raw images and associated Digital Imaging and Communications in Medicine data used to train the model should be directed to T.G.P.

Acknowledgements

This work was supported by the Canadian Institutes of Health Research (funding grant no. 381340, to T.G.P. and C.M.) through the Collaborative Health Research Projects (Natural Sciences and Engineering Research Council of Canada partnered) and the Princess Margaret Cancer Foundation (to T.G.P., C.M. and A.B.). We thank M. Lupien and M. Mamdani for their critical reading of the manuscript and insightful comments in improving this work.

Author information

Contributions

C.M., L.C., T.G.P. and A.B. designed the study and assisted in data analysis and writing the article. A.B. and L.C. facilitated and oversaw the clinical reviews and data analysis. L.C. collected data and performed statistical analysis. M.C.T. contributed to study design and data collection. T.C., A.B., C.C., M.G., J.H., N.I., S.R., P.W., P.C. and A.B. contributed to the acquisition, analysis and expert review of RT plans. V.K. and T.L. contributed to data acquisition.

Ethics declarations

Competing interests

C.M. and T.G.P. receive royalties from RaySearch Laboratories in relation to ML RT treatment planning. The remaining authors report no competing interests.

Additional information

Peer review information Nature Medicine thanks Julian Hong, Xiaoxuan Liu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Javier Carmona was the primary editor on this article and managed its editorial process and peer review in collaboration with the rest of the editorial team.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Machine learning model training and ML RT treatment planning.

The ML model training pipeline consists of four phases: (i) Feature Extraction: relevant features from the CT images and delineated anatomy are extracted; (ii) Atlas Regression Forest (ARF) training: one ARF for each patient in the training database is trained; (iii) Accuracy Estimation: a cross-validation that measures the accuracy of each ARF in predicting dose for all patients in the training database; (iv) Atlas Selection Learning: trains a Random Forest model to predict ARF accuracy for each training patient as a function of differences between observed feature distributions. ML RT treatment planning for a novel patient follows an analogous path in five steps: (i) Feature Extraction; (ii) Atlas Selection: uses the trained selection model to find the best atlases; (iii) Probabilistic Dose Prediction: estimates the probability of different dose values per voxel across the entire image set; (iv) Conditional Random Field Spatial Dose Optimization: infers the dose-per-voxel that fits a predicted overall distribution of dose; (v) Dose Mimicking Optimization: creates a physically deliverable treatment from the prediction.
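The atlas selection step (ii) can be pictured as a ranking problem. The following is a minimal sketch, assuming the trained selection model has already produced one predicted accuracy score per atlas for the novel patient. The atlas identifiers, the scores and the choice of `k` are hypothetical and are not taken from the study; the published method may combine atlases differently.

```python
from heapq import nlargest


def select_atlases(predicted_accuracy, k=3):
    """Return the k atlas IDs with the highest predicted accuracy.

    predicted_accuracy maps atlas ID -> score predicted by a selection
    model (hypothetical values; higher is assumed to mean better).
    """
    return nlargest(k, predicted_accuracy, key=predicted_accuracy.get)


# Hypothetical scores for a novel patient (illustration only).
predicted_accuracy = {
    "atlas_01": 0.71,
    "atlas_02": 0.88,
    "atlas_03": 0.64,
    "atlas_04": 0.93,
}

best = select_atlases(predicted_accuracy, k=2)
print(best)
```

In this sketch the selected atlases would then feed the probabilistic dose prediction of step (iii).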

Extended Data Fig. 2 RT treatment plan evaluation schema for study.

a, Study design: 100 consecutive eligible prostate cancer patients were included in the study. The first 50 patients (simulation phase) were treated using standard human-generated RT plans. ML-generated RT plans were compared retrospectively to the human-generated RT plan by the corresponding treating physician under blinded review. For the following 50 patients (deployment phase), ML- and human-generated RT plans were reviewed upfront, and the treating physician selected the RT plan under blinded review that was used for patient treatment (ML or human). The ML treatment planning strategy was not applicable for 3/50 patients and 7/50 patients for the simulation and deployment cohorts, respectively. b, Simulation phase RT plan evaluation results and c, Deployment phase RT plan evaluation results are shown. During blinded plan review and direct head-to-head comparison, treating physicians were asked to use their clinical judgement to select the most clinically appropriate RT plan (‘Selected’) and to indicate which RT plan they believed they were selecting (‘Perceived’). An independent consensus review determined the RT plan with quantitative metrics most consistent with the established clinical practice guidelines (‘Quantitatively Superior’) (Extended Data Figs. 5 and 6).

Extended Data Fig. 3 Feasibility study results.

a, The proof-of-concept feasibility phase of the study was conducted to assess the clinical applicability of the ML model following technical validation. Blinded reviews of ML- and human-generated RT plans were done by three expert reviewers (n = 17 cases, 51 reviews). The reviewers evaluated both quantitative and qualitative metrics as well as overall clinical acceptability for each pair of RT plans independently. Overall, the number of clinically acceptable ML- and human-generated RT plans was equivalent, with ML RT plans typically demonstrating better target coverage and organ sparing compared with human RT plans. Qualitative metrics (dose conformity to target, dose falloff towards rectum, dose symmetry laterally) were used to evaluate the spatial appearance of RT plans. Human RT plans performed better on isolated qualitative metrics, according to the reviewers. b, The reviewers also conducted a head-to-head comparison of the ML- and human-generated RT plans for each patient. Across all reviews, ML RT plans were deemed superior in 38 reviews (74.5%), equivalent in 3 (5.9%), and inferior in 10 (19.6%). In 15 of 17 (88.2%) cases the ML RT plans were rated as equivalent or superior to the human RT plan by majority rule across the three reviewers. Based on these results, all genitourinary treating physicians at our institution agreed to transition the entire genitourinary site group to the simulation and deployment phases for clinical validation.
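The per-case majority rule and the review-level percentages above can be reproduced with a short sketch. It assumes that "majority rule" means more than half of the three reviewers rated the ML plan as equivalent or superior; the example ratings are hypothetical, and only the counts 38, 3 and 10 come from the caption.

```python
def ml_equivalent_or_superior(ratings):
    """True if more than half of the reviewers rated the ML plan
    as 'superior' or 'equivalent' to the human plan (assumed rule)."""
    favourable = sum(r in ("superior", "equivalent") for r in ratings)
    return favourable > len(ratings) / 2


# Hypothetical ratings from three reviewers for three cases.
cases = {
    "case_a": ["superior", "superior", "inferior"],
    "case_b": ["equivalent", "inferior", "inferior"],
    "case_c": ["superior", "equivalent", "inferior"],
}
results = {name: ml_equivalent_or_superior(r) for name, r in cases.items()}

# Review-level shares reported in the caption (51 reviews in total).
counts = {"superior": 38, "equivalent": 3, "inferior": 10}
total = sum(counts.values())
shares = {label: round(100 * n / total, 1) for label, n in counts.items()}
print(results, shares)
```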

Extended Data Fig. 4 Blinded re-test simulation phase results.

Re-test of clinical judgement (selected RT plan) for the ML applicable cases in the simulation phase (n = 47) per treating physician. Four physicians did not change any selected plans on second review, two treating physicians (2 and 6) changed one selected RT plan from human to ML, and one treating physician (3) changed two selected RT plans from ML to human. As a result, there was no net change in RT plans selected across all treating physicians for the simulation evaluations, demonstrating high observer judgement reproducibility with re-test.

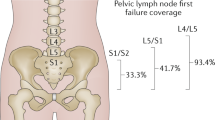

Extended Data Fig. 5 Metrics for quantitative RT treatment plan consensus review.

Clinical practice guidelines include quantitative RT treatment plan objectives represented by upper and lower bounds for treating targets (circles) and upper bounds where dose is to be minimized to spare organs (down arrows) to be achieved for all RT treatment plans. For example, the objectives specify that 99% of the Planning Target Volume is to receive greater than 57 Gray (Gy; or 95% of the prescribed dose), and 50% of the Rectum and Bladder volumes are to receive less than 38 Gy (or 63% of the prescribed dose). These objective metrics were used by three independent expert reviewers to establish the consensus ‘Quantitatively Superior’ RT plan between ML- and human-generated RT plans based on adherence to clinical practice guidelines. The dosimetric data shown (colourwash) corresponds to a ML-generated RT plan for one patient in the deployment phase.
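The two example objectives can be checked against a list of per-voxel doses. This is a minimal sketch, assuming equal voxel volumes so that volume fractions reduce to voxel counts. The function names and the toy dose values are hypothetical; only the 57 Gy / 99% and 38 Gy / 50% thresholds come from the caption.

```python
def fraction_above(doses_gy, threshold_gy):
    """Fraction of voxels receiving more than threshold_gy."""
    return sum(d > threshold_gy for d in doses_gy) / len(doses_gy)


def fraction_below(doses_gy, threshold_gy):
    """Fraction of voxels receiving less than threshold_gy."""
    return sum(d < threshold_gy for d in doses_gy) / len(doses_gy)


def meets_target_coverage(doses_gy, threshold_gy=57.0, min_fraction=0.99):
    """Target objective: at least 99% of the volume receives > 57 Gy."""
    return fraction_above(doses_gy, threshold_gy) >= min_fraction


def meets_organ_sparing(doses_gy, threshold_gy=38.0, min_fraction=0.50):
    """Organ objective: at least 50% of the volume receives < 38 Gy."""
    return fraction_below(doses_gy, threshold_gy) >= min_fraction


# Toy per-voxel doses in Gy (illustration only, not study data).
ptv = [60.0] * 199 + [56.0]          # 99.5% of voxels above 57 Gy
rectum_ok = [20.0] * 60 + [50.0] * 40   # 60% of voxels below 38 Gy
rectum_hot = [20.0] * 40 + [50.0] * 60  # only 40% of voxels below 38 Gy

print(meets_target_coverage(ptv))
print(meets_organ_sparing(rectum_ok), meets_organ_sparing(rectum_hot))
```

A clinical system would typically weight each voxel by its partial volume inside the structure; the equal-volume assumption is kept here only for brevity.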

Extended Data Fig. 6 Quantitative dosimetric results according to plan evaluation metrics from clinical practice guidelines.

Results for simulation (n = 50) and deployment (n = 50) phases for both ML- and human-generated RT plans for guideline criteria corresponding to each target and organ. Colored box plots display the median (central bar) and mean (‘x’) values with the interquartile range (IQR; lower and upper hinge), and the whiskers mark 1.5 × IQR; outliers are defined as any point beyond 1.5 × IQR and are represented with a circle. Owing to the small number of cases in which the optional Small and Large Bowel were delineated, the individual data points are depicted with a hollow circle. Statistical equivalence between ML- and human-generated RT plans in the simulation and deployment phases was assessed using independent two one-sided t-tests (TOST), with an asterisk (*) denoting the distributions found to be not equivalent. The overall performance of the specialized RT planning therapists, as measured by quantitative metrics, did not change between simulation and deployment despite the introduction of a potential replacement technology, except for the maximum dose to the penile bulb (p = 0.161). The penile bulb RT treatment planning objective, which also increased for the ML RT plans between simulation and deployment (p = 0.196), is considered acceptable if below the 60 Gy objective and rarely drives clinical decisions. The quantitative metrics for ML RT plans were equivalent between simulation and deployment except for the rectum 30% volume < 47 Gray (Gy) objective (p = 0.092), although the mean values for simulation (34.2 Gy) and deployment (37.6 Gy) were both well below the objective and lower than the mean values for the human RT plans (40.0 Gy and 42.6 Gy, respectively). Note: no statistical test for equivalence was performed for Small Bowel and Large Bowel owing to the insufficient number of cases in which these optional organs were delineated.
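In a TOST procedure the null hypothesis is non-equivalence, so a large p value (for example p = 0.161) means that equivalence could not be demonstrated. The sketch below illustrates the logic using only the Python standard library. It swaps the t-distribution for a normal approximation, so it is a large-sample stand-in rather than the t-based test named in the caption. The equivalence margin, the significance level and the dose values are assumptions; the caption does not state the margin used in the study.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def tost_equivalence(a, b, margin, alpha=0.05):
    """Two one-sided tests for equivalence of two independent means.

    Uses a normal approximation (not the t-distribution) with an
    unpooled standard error. Returns (p_value, is_equivalent).
    """
    diff = mean(a) - mean(b)
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    z = NormalDist()
    # H0 (lower): diff <= -margin; reject when diff is well above -margin.
    p_lower = 1.0 - z.cdf((diff + margin) / se)
    # H0 (upper): diff >= +margin; reject when diff is well below +margin.
    p_upper = z.cdf((diff - margin) / se)
    p_value = max(p_lower, p_upper)
    return p_value, p_value < alpha


# Hypothetical dose metrics in Gy for two phases (not study data).
phase_1 = [34.0, 35.0, 36.0, 35.5, 34.5] * 4
phase_2 = [34.2, 35.1, 35.9, 35.4, 34.6] * 4
shifted = [x + 5.0 for x in phase_1]

p_same, eq_same = tost_equivalence(phase_1, phase_2, margin=2.0)
p_shift, eq_shift = tost_equivalence(shifted, phase_2, margin=2.0)
print(eq_same, eq_shift)
```

Both one-sided tests must reject for equivalence to be claimed, which is why the larger of the two p values is reported.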

Extended Data Fig. 7 Example clinical presentations for scoring quantitatively superior RT plans.

Axial CT images of the pelvis showing the target and relevant organs for example cases from the deployment phase. Left: a quantitatively superior ML RT plan selected by the treating physician, in which rectum and bladder doses were 26.4% and 11.7% lower, respectively, than in the human RT plan. Middle: a quantitatively inferior human RT plan selected, in which the ML RT plan had rectum and bladder doses 2.8% and 3.5% lower, respectively, than the human RT plan. Right: a quantitatively inferior ML RT plan selected, in which the ML RT plan had a 14.7% higher rectum dose than the human RT plan but an 8.0% lower bladder dose. Overall, in the deployment phase, 24/26 (92%) of ML RT plans selected by the treating physician were also quantitatively superior, while 7/17 (41%) of the selected human RT plans were quantitatively superior (not shown).

Extended Data Fig. 8 Distribution of atlas distances.

Atlas distances, an indication of similarity between the novel patient and the training set atlases, were compared between the simulation (n = 50) and deployment (n = 50) phases of the study. Three atlas distances are automatically generated by the ML treatment planning process. a, The ‘spatial distance’ is calculated based on the spatial dose distribution and dose-volume frequency distribution over the entire patient. b, The ‘prior distance’ combines the dose-per-voxel probability distribution with dose-volume frequency distributions for each target and organ. c, The ‘dose mimicking distance’ is derived from the per-voxel magnitude adjustment necessary to create the final clinically deliverable RT plan from the predicted dose. The three atlas distances were not different between the simulation and deployment phases of the study. This suggests that, from an ML perspective, the patients in the two study phases were similarly comparable to the patient training database used for ML-generated RT plans.

Supplementary information

Supplementary Information

Supplementary Fig. 1.

About this article

Cite this article

McIntosh, C., Conroy, L., Tjong, M.C. et al. Clinical integration of machine learning for curative-intent radiation treatment of patients with prostate cancer. Nat Med 27, 999–1005 (2021). https://doi.org/10.1038/s41591-021-01359-w

DOI: https://doi.org/10.1038/s41591-021-01359-w
