Abstract

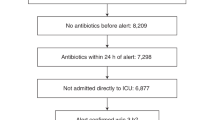

Machine learning-based clinical decision support tools for sepsis create opportunities to identify at-risk patients and initiate treatments at early time points, which is critical for improving sepsis outcomes. In view of the increasing use of such systems, better understanding of how they are adopted and used by healthcare providers is needed. Here, we analyzed provider interactions with a sepsis early detection tool (Targeted Real-time Early Warning System), which was deployed at five hospitals over a 2-year period. Among 9,805 retrospectively identified sepsis cases, the early detection tool achieved high sensitivity (82% of sepsis cases were identified) and a high rate of adoption: 89% of all alerts by the system were evaluated by a physician or advanced practice provider and 38% of evaluated alerts were confirmed by a provider. Adjusting for patient presentation and severity, patients with sepsis whose alert was confirmed by a provider within 3 h had a 1.85-h (95% CI 1.66–2.00) reduction in median time to first antibiotic order compared to patients with sepsis whose alert was either dismissed, confirmed more than 3 h after the alert or never addressed in the system. Finally, we found that emergency department providers and providers who had previous interactions with an alert were more likely to interact with alerts, as well as to confirm alerts on retrospectively identified patients with sepsis. Beyond efforts to improve the performance of early warning systems, efforts to improve adoption are essential to their clinical impact and should focus on understanding providers’ knowledge of, experience with and attitudes toward such systems.


Data availability

The data are not publicly available because they are from electronic health records approved for limited use by Johns Hopkins University investigators. Making the data publicly available without additional consent, ethical or legal approval might compromise patients’ privacy and the original ethical approval. To perform additional analyses using these data, researchers should contact A.W.W. or S.S. to apply for an institutional review board-approved research collaboration and obtain an appropriate data-use agreement.

Code availability

The TREWS early warning system described in this study is available from Bayesian Health. The underlying source code is proprietary intellectual property and is not available. Code for the primary statistical analyses can be found at https://github.com/royadams/henry_et_al_2022_code.

Acknowledgements

The authors thank Y. Ahmad, A. Zhang, M. Yeo and Y. Karklin, whose work significantly contributed to early iterations of the development of the deployed system. We also thank R. Demski, K. D’Souza, A. Kachalia, A. Chen and clinical and quality stakeholders who contributed to tool deployment, education and championing the work. The authors gratefully acknowledge the following sources of funding: the Gordon and Betty Moore Foundation (award 3926), the National Science Foundation Future of Work at the Human-technology Frontier (award 1840088) and the Alfred P. Sloan Foundation research fellowship (2018). This information or content and conclusions are those of the authors and should not be construed as the official position or policy of, nor should any endorsements be inferred by, the NSF or the US Government.

Author information

Contributions

K.E.H., R.A., C.P., E.S.C., A.W.W. and S.S. contributed to the initial study design and preliminary analysis plan. S.S. led the development and deployment efforts for the TREWS software. K.E.H., H.S., A.S., R.C.L., L.J., M.H., S.M., D.N.H., A.W.W. and S.S. contributed to the system development and deployment. K.E.H., R.A., C.P., E.Y.K., S.E.C., A.R.C., E.S.C., D.N.H., A.W.W. and S.S. contributed to the review and analysis of the results. All authors contributed to the final preparation of the manuscript.

Ethics declarations

Competing interests

Under a license agreement between Bayesian Health and the Johns Hopkins University, K.E.H., S.S. and Johns Hopkins University are entitled to revenue distributions. Additionally, the University owns equity in Bayesian Health. This arrangement has been reviewed and approved by the Johns Hopkins University in accordance with its conflict-of-interest policies. S.S. also has grants from the Gordon and Betty Moore Foundation, the National Science Foundation, the National Institutes of Health, the Defense Advanced Research Projects Agency, the Food and Drug Administration and the American Heart Association; she is a founder of and holds equity in Bayesian Health; she is a scientific advisory board member for PatientPing; and she has received honoraria for talks from a number of biotechnology, research and health-tech companies. This arrangement has been reviewed and approved by the Johns Hopkins University in accordance with its conflict-of-interest policies. D.N.H. discloses salary support and funding to his institution from the Marcus Foundation for the conduct of the vitamin C, thiamine and steroids in sepsis trial. S.E.C. declares consulting fees from Basilea for work on an infection adjudication committee for an S. aureus bacteremia trial. The other authors declare no competing interests.

Peer review

Peer review information

Nature Medicine thanks Derek Angus, Melanie Wright and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling editor: Michael Basson, in collaboration with the Nature Medicine team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Retrospective predictive performance of the TREWS model.

Performance of the TREWS model on retrospective data. Figure (a) shows the receiver operating characteristic curve and Figure (b) shows the sensitivity–PPV curve (also referred to as the precision-recall curve).

Extended Data Fig. 2 Annotated screenshot of the TREWS interface.

Annotated screenshot of the TREWS provider evaluation page. Annotations show the main provider actions: reviewing the alert explanation, indicating whether the patient has a suspected source of infection and reviewing sources of organ dysfunction.

About this article

Cite this article

Henry, K.E., Adams, R., Parent, C. et al. Factors driving provider adoption of the TREWS machine learning-based early warning system and its effects on sepsis treatment timing. Nat Med 28, 1447–1454 (2022). https://doi.org/10.1038/s41591-022-01895-z

DOI: https://doi.org/10.1038/s41591-022-01895-z
